1.1 THE METHOD OF CHARACTERISTICS.

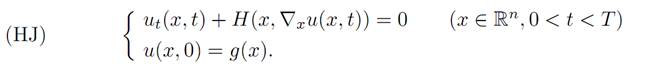

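Assume $H : \mathbb{R}^n \times \mathbb{R}^n \to \mathbb{R}$ and consider this initial-value problem for the Hamilton–Jacobi equation:

$$\text{(HJ)}\qquad \begin{cases} u_t(x,t) + H(x, \nabla_x u(x,t)) = 0 & (x \in \mathbb{R}^n,\ 0 < t < T), \\ u(x,0) = g(x). \end{cases}$$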

A basic idea in PDE theory is to introduce ordinary differential equations whose solutions let us compute the solution u. In particular, we want to find a curve x(·) along which we can, in principle at least, compute u(x, t).

This section discusses this method of characteristics, to make clearer the connections between PDE theory and the Pontryagin Maximum Principle.

NOTATION.

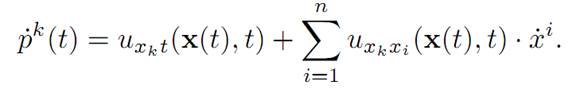

Derivation of characteristic equations. We have

$$p^k(t) = u_{x_k}(x(t), t),$$

and therefore

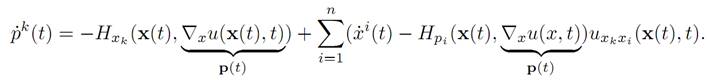

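Now suppose u solves (HJ). We differentiate this PDE with respect to the variable $x_k$:

$$u_{t x_k}(x,t) = -H_{x_k}(x, \nabla_x u(x,t)) - \sum_{i=1}^n H_{p_i}(x, \nabla_x u(x,t))\, u_{x_k x_i}(x,t).$$

Let x = x(t) and substitute into the chain-rule expression for $\dot p^k(t)$:

$$\dot p^k(t) = u_{x_k t}(x(t),t) + \sum_{i=1}^n u_{x_k x_i}(x(t),t)\, \dot x^i(t) = -H_{x_k}(x(t), p(t)) + \sum_{i=1}^n \big(\dot x^i(t) - H_{p_i}(x(t), p(t))\big)\, u_{x_k x_i}(x(t), t).$$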

We can simplify this expression if we select x(·) so that

$$\dot x^i(t) = H_{p_i}(x(t), p(t)) \qquad (1 \le i \le n);$$

then

$$\dot p^k(t) = -H_{x_k}(x(t), p(t)) \qquad (1 \le k \le n).$$

These are Hamilton’s equations, already discussed in a different context in §4.1:

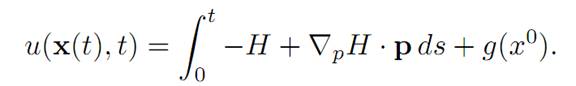

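We next demonstrate that if we can solve Hamilton's equations (H), then this gives a solution to the PDE (HJ) satisfying the initial condition u = g on t = 0. Set $p^0 = \nabla g(x^0)$. We solve (H) with $x(0) = x^0$ and $p(0) = p^0$. Next, let us calculate

$$\frac{d}{dt}\, u(x(t), t) = u_t(x(t), t) + \nabla_x u(x(t), t) \cdot \dot x(t) = -H(x(t), p(t)) + p(t) \cdot \nabla_p H(x(t), p(t)).$$

Note also $u(x(0), 0) = u(x^0, 0) = g(x^0)$. Integrate, to compute u along the curve x(·):

$$u(x(t), t) = g(x^0) + \int_0^t \big[ -H(x(s), p(s)) + \nabla_p H(x(s), p(s)) \cdot p(s) \big]\, ds.$$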

This gives us the solution, once we have calculated x(.) and p(.).
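The recipe above can be tried numerically. The following is a minimal sketch, not taken from the text: it assumes the one-dimensional Hamiltonian H(x, p) = p²/2 and initial data g(x) = x²/2, for which one can check by hand that u(x, t) = x²/(2(1 + t)) solves (HJ). The function names and the hand-written Runge–Kutta step are illustrative choices.

```python
# Illustrative assumptions (not from the text): n = 1, H(x, p) = p^2 / 2, g(x) = x^2 / 2.

def H(x, p):
    return 0.5 * p * p

def H_x(x, p):
    return 0.0          # this H does not depend on x

def H_p(x, p):
    return p

def g(x):
    return 0.5 * x * x

def grad_g(x):
    return x

def rhs(state):
    """Right-hand side for (x, p, u): Hamilton's equations plus du/dt = -H + p * H_p."""
    x, p, u = state
    return (H_p(x, p), -H_x(x, p), -H(x, p) + p * H_p(x, p))

def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    def shift(s, k, c):
        return tuple(si + c * ki for si, ki in zip(s, k))
    k1 = rhs(state)
    k2 = rhs(shift(state, k1, dt / 2))
    k3 = rhs(shift(state, k2, dt / 2))
    k4 = rhs(shift(state, k3, dt))
    return tuple(s + dt * (a + 2 * b + 2 * c + d) / 6
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

def solve_along_characteristic(x0, t_final, steps=1000):
    """Start at x(0) = x0, p(0) = grad g(x0), u = g(x0) and integrate up to t_final."""
    state = (x0, grad_g(x0), g(x0))
    dt = t_final / steps
    for _ in range(steps):
        state = rk4_step(state, dt)
    return state

t_final = 2.0
x_t, p_t, u_t = solve_along_characteristic(x0=1.5, t_final=t_final)
exact = x_t ** 2 / (2 * (1 + t_final))   # hand-computed solution for this H and g
print(x_t, u_t, exact)
```

The value u_t is the solution at the point (x(t), t) reached by the characteristic, not at a point chosen in advance; to evaluate u at a prescribed (x, t) one would still have to find the x⁰ whose characteristic passes through it.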

1.2 CONNECTIONS BETWEEN DYNAMIC PROGRAMMING AND THE PONTRYAGIN MAXIMUM PRINCIPLE.

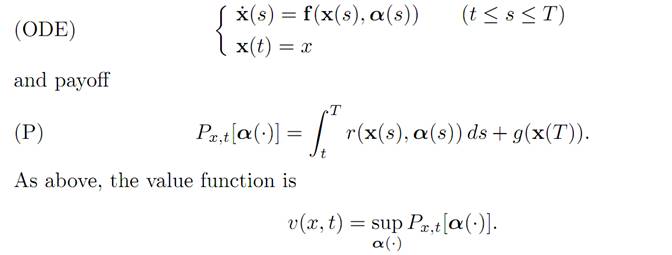

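Return now to our usual control theory problem, with dynamics

$$\text{(ODE)}\qquad \begin{cases} \dot x(s) = f(x(s), \alpha(s)) & (t \le s \le T), \\ x(t) = x, \end{cases}$$

and payoff

$$\text{(P)}\qquad P_{x,t}[\alpha(\cdot)] = \int_t^T r(x(s), \alpha(s))\, ds + g(x(T)).$$

The value function is

$$v(x,t) = \sup_{\alpha(\cdot)} P_{x,t}[\alpha(\cdot)].$$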

The next theorem demonstrates that the costate in the Pontryagin Maximum Principle is in fact the gradient in x of the value function v, taken along an optimal trajectory:

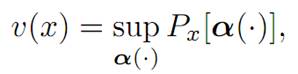

THEOREM 1.1 (COSTATES AND GRADIENTS). Assume α∗(.), x∗(.) solve the control problem (ODE), (P).

If the value function v is $C^2$, then the costate p∗(·) occurring in the Maximum Principle is given by

$$p^*(s) = \nabla_x v(x^*(s), s) \qquad (t \le s \le T).$$

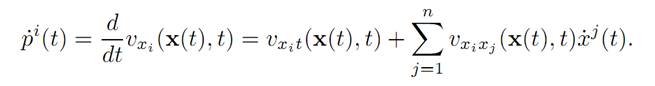

Proof. 1. As usual, suppress the superscript *. Define p(t) := ∇xv(x(t), t).

We claim that p(.) satisfies conditions (ADJ) and (M) of the Pontryagin Maximum Principle. To confirm this assertion, look at

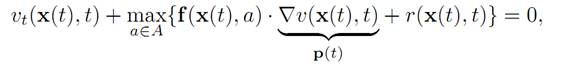

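We know v solves the Hamilton–Jacobi–Bellman equation

$$\text{(HJB)}\qquad v_t(x,t) + \max_{a \in A} \{ f(x,a) \cdot \nabla_x v(x,t) + r(x,a) \} = 0;$$

and, applying the optimal control α(·), we find:

$$v_t(x(t), t) + f(x(t), \alpha(t)) \cdot \nabla_x v(x(t), t) + r(x(t), \alpha(t)) = 0.$$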

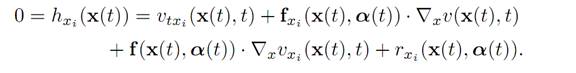

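2. Now freeze the time t and define the function

$$h(x) := v_t(x,t) + f(x, \alpha(t)) \cdot \nabla_x v(x,t) + r(x, \alpha(t)) \le 0,$$

the inequality holding because v solves the Hamilton–Jacobi–Bellman equation, in which the maximum over all control values a equals zero, and a = α(t) is just one such value.

Observe that h(x(t)) = 0. Consequently h(·) has a maximum at the point x = x(t); and therefore for i = 1, . . . , n,

$$0 = h_{x_i}(x(t)) = v_{t x_i}(x(t), t) + f_{x_i}(x(t), \alpha(t)) \cdot \nabla_x v(x(t), t) + f(x(t), \alpha(t)) \cdot \nabla_x v_{x_i}(x(t), t) + r_{x_i}(x(t), \alpha(t)).$$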

Substitute above:

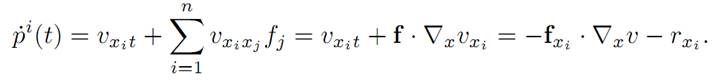

Recalling that p(t) = ∇ₓv(x(t), t), we deduce that

$$\dot p(t) = -(\nabla_x f)\, p - \nabla_x r.$$

Recall also

$$H = f \cdot p + r, \qquad \nabla_x H = (\nabla_x f)\, p + \nabla_x r.$$

Hence

$$\dot p(t) = -\nabla_x H(x(t), p(t), \alpha(t)),$$

which is (ADJ).
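The identity p(s) = ∇ₓv(x(s), s) can be checked on a small example. The sketch below is an illustration under assumptions that are not in the text: a scalar problem with dynamics ẋ = α, running payoff r(x, a) = −(x² + a²)/2, terminal payoff g ≡ 0, unconstrained controls, and starting time 0. For this problem a quadratic ansatz in the Hamilton–Jacobi–Bellman equation gives v(x, t) = −tanh(T − t) x²/2, the control Hamiltonian H = a p − (x² + a²)/2 is maximized at a = p, and (ADJ) reads ṗ = x. The code integrates the pair (x, p) forward and compares p with ∇ₓv along the trajectory.

```python
import math

T = 1.0    # terminal time (illustrative value)
x0 = 2.0   # initial state at time 0 (illustrative value)

def grad_v(x, t):
    """Gradient in x of the hand-computed value function v(x, t) = -tanh(T - t) * x^2 / 2."""
    return -math.tanh(T - t) * x

def rhs(x, p):
    """State equation with alpha = p, and the adjoint equation p' = -H_x = x."""
    return p, x

def rk4_step(x, p, dt):
    """One classical fourth-order Runge-Kutta step for the pair (x, p)."""
    k1x, k1p = rhs(x, p)
    k2x, k2p = rhs(x + dt / 2 * k1x, p + dt / 2 * k1p)
    k3x, k3p = rhs(x + dt / 2 * k2x, p + dt / 2 * k2p)
    k4x, k4p = rhs(x + dt * k3x, p + dt * k3p)
    return (x + dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6,
            p + dt * (k1p + 2 * k2p + 2 * k3p + k4p) / 6)

steps = 2000
dt = T / steps
x, p = x0, grad_v(x0, 0.0)   # start the costate at the gradient of v
max_gap = 0.0
for n in range(steps):
    x, p = rk4_step(x, p, dt)
    t = (n + 1) * dt
    max_gap = max(max_gap, abs(p - grad_v(x, t)))

print(max_gap, x, p)
```

Under these assumptions the gap stays at the level of integration error, and the final costate is close to 0, which equals ∇g for g ≡ 0.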

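3. Now we must check condition (M). According to (HJB),

$$v_t(x(t), t) + \max_{a \in A} \{ f(x(t), a) \cdot \nabla_x v(x(t), t) + r(x(t), a) \} = 0,$$

and the maximum occurs for a = α(t). Hence

$$\max_{a \in A} H(x(t), p(t), a) = H(x(t), p(t), \alpha(t));$$

and this is assertion (M) of the Maximum Principle.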
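Condition (M) and the Hamilton–Jacobi–Bellman equation can also be checked pointwise. The sketch below assumes an illustrative scalar problem that is not in the text: f(x, a) = a, r(x, a) = −(x² + a²)/2, g ≡ 0, with hand-computed value function v(x, t) = −tanh(T − t) x²/2. It maximizes H(x, p, a) = a p − (x² + a²)/2 over a grid of control values at p = ∇ₓv(x, t), and evaluates the residual v_t + max H with a finite-difference time derivative. The sample point and the grid are arbitrary choices.

```python
import math

T = 1.0   # terminal time (illustrative value)

def v(x, t):
    """Hand-computed value function for the assumed problem."""
    return -0.5 * math.tanh(T - t) * x * x

def grad_v(x, t):
    return -math.tanh(T - t) * x

def hamiltonian(x, p, a):
    """Control Hamiltonian H = f * p + r with f = a and r = -(x^2 + a^2) / 2."""
    return a * p - 0.5 * (x * x + a * a)

x, t = 1.2, 0.3          # arbitrary sample point
p = grad_v(x, t)         # costate predicted by the theorem

# Brute-force maximization over a grid of control values in [-3, 3].
grid = [-3.0 + 0.001 * i for i in range(6001)]
best_a = max(grid, key=lambda a: hamiltonian(x, p, a))

# Central finite difference for v_t, then the HJB residual at (x, t).
eps = 1e-5
v_t = (v(x, t + eps) - v(x, t - eps)) / (2 * eps)
residual = v_t + hamiltonian(x, p, best_a)

print(best_a, p, residual)
```

For this Hamiltonian the maximizer is a = p, so best_a should agree with p up to the grid spacing, and the residual should be small.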

INTERPRETATIONS. The foregoing provides us with another way to look at transversality conditions:

(i) Free endpoint problem: Recall that we stated earlier in (PONTRYAGIN MAXIMUM PRINCIPLE) that for the free endpoint problem we have the condition

$$\text{(T)}\qquad p^*(T) = \nabla g(x^*(T))$$

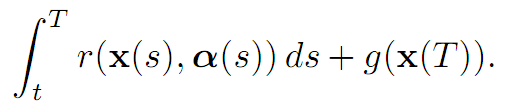

for the payoff functional

$$\int_t^T r(x(s), \alpha(s))\, ds + g(x(T)).$$

To understand this better, note $p^*(s) = \nabla_x v(x^*(s), s)$. But $v(x, T) = g(x)$, and hence the foregoing implies

$$p^*(T) = \nabla_x v(x^*(T), T) = \nabla g(x^*(T)).$$

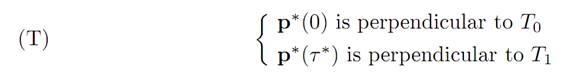

(ii) Constrained initial and target sets:

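Recall that for this problem we stated in Theorem (MORE TRANSVERSALITY CONDITIONS) the transversality conditions that

$$\text{(T)}\qquad p^*(0) \text{ is perpendicular to } T_0, \qquad p^*(\tau^*) \text{ is perpendicular to } T_1,$$

where $T_0$ and $T_1$ are the tangent planes to $X_0$ and $X_1$ at the endpoints of the optimal trajectory, and $\tau^*$ denotes the first time the optimal trajectory hits the target set $X_1$.

Now let v be the value function for this problem:

$$v(x) = \sup_{\alpha(\cdot)} P_x[\alpha(\cdot)],$$

with the constraint that we start at $x^0 \in X_0$ and end at $x^1 \in X_1$. But then v will be constant on the set $X_0$ and also constant on $X_1$. Since ∇v is perpendicular to any level surface, ∇v is therefore perpendicular to both $\partial X_0$ and $\partial X_1$. And since $p^*(t) = \nabla v(x^*(t))$, this means that

$$p^* \text{ is perpendicular to } \partial X_0 \text{ at } t = 0, \qquad p^* \text{ is perpendicular to } \partial X_1 \text{ at } t = \tau^*.$$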

