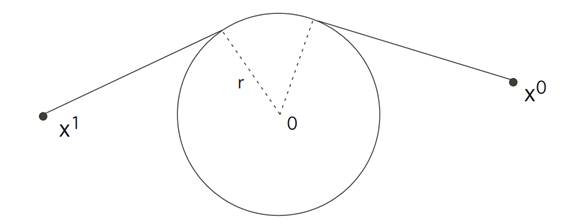

1.1 EXAMPLE 1: SHORTEST DISTANCE BETWEEN TWO POINTS, AVOIDING AN OBSTACLE.

What is the shortest path between two points that avoids the disk B = B(0, r), as drawn?

Let us take

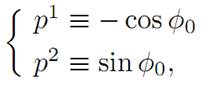

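for $A = S^1$, with the payoff

$$P[\alpha(\cdot)] = -\int_0^\tau |\dot x(t)|\,dt = -\,\text{length of the curve } x(\cdot), \tag{P}$$

where $\tau$ is the time at which the target point is reached.

We have

$$H(x, p, a) = f \cdot p + r = p_1 a_1 + p_2 a_2 - 1.$$

In this formula $r$ denotes the running payoff, which is $-1$ because $|\dot x| = |\alpha| = 1$; it is not the radius of $B$.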

Case 1: avoiding the obstacle. Assume x(t) ∉ ∂B on some time interval.

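In this case, the usual Pontryagin Maximum Principle applies, and we deduce as before that

$$\dot p = -\nabla_x H = 0.$$

Hence

$$p(t) \equiv \text{constant} = p^0. \tag{ADJ}$$

Condition (M) says

$$H(x(t), p(t), \alpha(t)) = \max_{a \in S^1} \left(-1 + p^0_1 a_1 + p^0_2 a_2\right).$$

The maximum occurs for $\alpha = p^0/|p^0|$. Furthermore,

$$-1 + p^0_1 \alpha_1 + p^0_2 \alpha_2 \equiv 0,$$

and therefore $\alpha \cdot p^0 = 1$. This means that $|p^0| = 1$, and hence in fact $\alpha = p^0$. We have proved that the trajectory $x(\cdot)$ is a straight line away from the obstacle.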
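Condition (M) in Case 1 can be checked numerically. The sketch below brute-forces the maximum of a · p0 over unit vectors a and compares it with the closed form a = p0/|p0|. The vector `p0`, the helper name `best_direction`, and the sample count are illustrative assumptions and do not come from the notes.

```python
import math

def best_direction(p0, samples=3600):
    """Brute-force maximiser of a . p0 over unit vectors a = (cos t, sin t)."""
    best_val, best_a = -math.inf, None
    for i in range(samples):
        t = 2.0 * math.pi * i / samples
        a = (math.cos(t), math.sin(t))
        val = a[0] * p0[0] + a[1] * p0[1]
        if val > best_val:
            best_val, best_a = val, a
    return best_val, best_a

p0 = (3.0, 4.0)                      # hypothetical costate vector, |p0| = 5
norm = math.hypot(p0[0], p0[1])
val, a = best_direction(p0)
closed_form = (p0[0] / norm, p0[1] / norm)   # the claimed maximiser p0/|p0|
```

Up to the grid resolution, `val` agrees with `norm` and `a` agrees with `closed_form`. The additional condition H ≡ 0 is what then forces |p0| = 1 in the text.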

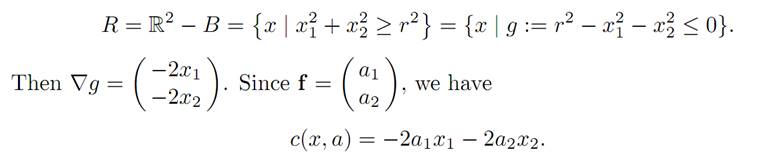

Case 2: touching the obstacle. Suppose now $x(t) \in \partial B$ for some time interval $s_0 \le t \le s_1$. Now we use the modified version of the Maximum Principle, provided by Theorem (MAXIMUM PRINCIPLE FOR STATE CONSTRAINTS).

First we must calculate $c(x, a) = \nabla g(x) \cdot f(x, a)$. In our case,

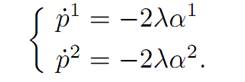

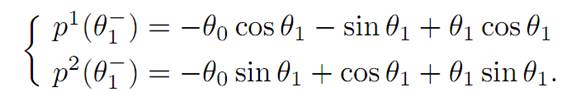
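For reference, the calculation of c can be written out as follows. This is a sketch under the assumption that the admissible region is described by g(x) = r² − x1² − x2², so that R = {g ≤ 0} is the exterior of the disk. The sign convention matches the one used for the inventory model below, but the particular normalization of g is an assumption.

```latex
\begin{aligned}
R &= \mathbb{R}^2 - B = \{x \mid x_1^2 + x_2^2 \ge r^2\}
   = \{x \mid g(x) := r^2 - x_1^2 - x_2^2 \le 0\},\\
\nabla g(x) &= (-2x_1,\, -2x_2)^T, \qquad f(x,a) = (a_1,\, a_2)^T,\\
c(x,a) &= \nabla g(x)\cdot f(x,a) = -2a_1x_1 - 2a_2x_2 .
\end{aligned}
```

The condition c(x, a) = 0 then says that the velocity a is orthogonal to x, that is, tangent to ∂B.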

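Now condition (ADJ′) implies

$$\dot p(t) = -\nabla_x H + \lambda(t)\nabla_x c;$$

which is to say, since $H$ does not depend on $x$ and $\nabla_x c = (-2\alpha_1, -2\alpha_2)^T$,

$$\dot p_1 = -2\lambda\alpha_1, \qquad \dot p_2 = -2\lambda\alpha_2. \tag{1.1}$$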

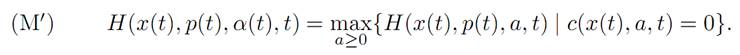

Next, we employ the maximization principle (M′). We need to maximize

H(x(t), p(t), a)

subject to the requirements that $c(x(t), a) = 0$ and $g_1(a) = a_1^2 + a_2^2 - 1 = 0$, since $A = \{a \in \mathbb{R}^2 \mid a_1^2 + a_2^2 = 1\}$. According to (M′′) we must solve

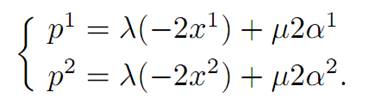

$$\nabla_a H = \lambda(t)\nabla_a c + \mu(t)\nabla_a g_1;$$

that is,

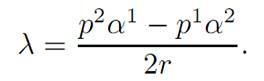

We can combine these identities to eliminate $\mu$. Since we also know that $x(t) \in \partial B$, we have $(x_1)^2 + (x_2)^2 = r^2$; and also $\alpha = (\alpha_1, \alpha_2)^T$ is tangent to $\partial B$. Using these facts, we find after some calculations that

$$\lambda = \frac{p_2\alpha_1 - p_1\alpha_2}{2r}. \tag{1.2}$$
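The calculation leading to (1.2) can be sketched as follows. This is a reconstruction, not the author's own working. It assumes the constraint function g(x) = r² − x1² − x2², so that ∇_a c = −2x, and it assumes the boundary arc is traversed counterclockwise, with x = r(cos θ, sin θ) and α = (−sin θ, cos θ). For the clockwise orientation the sign of λ flips.

```latex
\begin{aligned}
p_1 &= -2\lambda x_1 + 2\mu\alpha_1, \qquad
p_2 = -2\lambda x_2 + 2\mu\alpha_2
  && \text{(the system } \nabla_a H = \lambda\nabla_a c + \mu\nabla_a g_1\text{)}\\
p_2\alpha_1 - p_1\alpha_2 &= -2\lambda\,(x_2\alpha_1 - x_1\alpha_2)
  && \text{(the } \mu \text{ terms cancel)}\\
x_2\alpha_1 - x_1\alpha_2 &= r\sin\theta\,(-\sin\theta) - r\cos\theta\,\cos\theta = -r
  && \text{(tangency and } |x| = r\text{)}\\
\lambda &= \frac{p_2\alpha_1 - p_1\alpha_2}{2r}.
\end{aligned}
```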

But we also know

$$(\alpha_1)^2 + (\alpha_2)^2 = 1 \tag{1.3}$$

and

$$H \equiv 0 = -1 + p_1\alpha_1 + p_2\alpha_2;$$

hence

$$p_1\alpha_1 + p_2\alpha_2 \equiv 1. \tag{1.4}$$

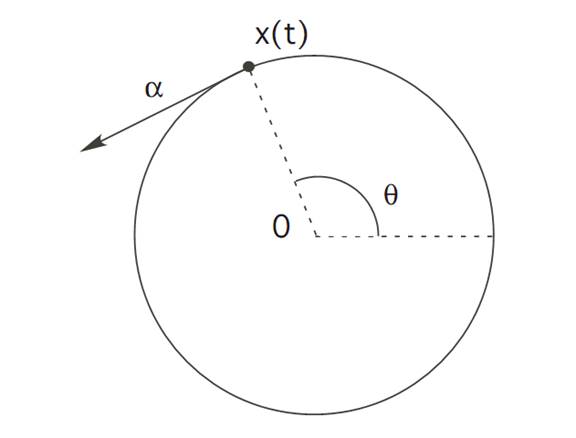

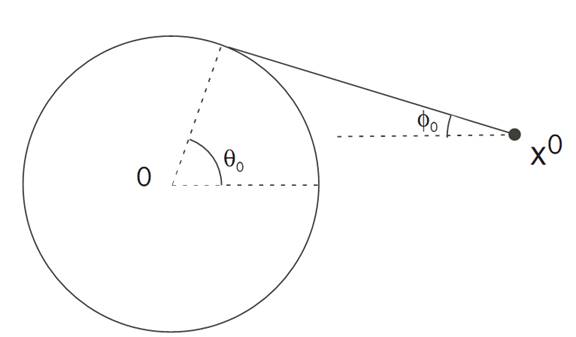

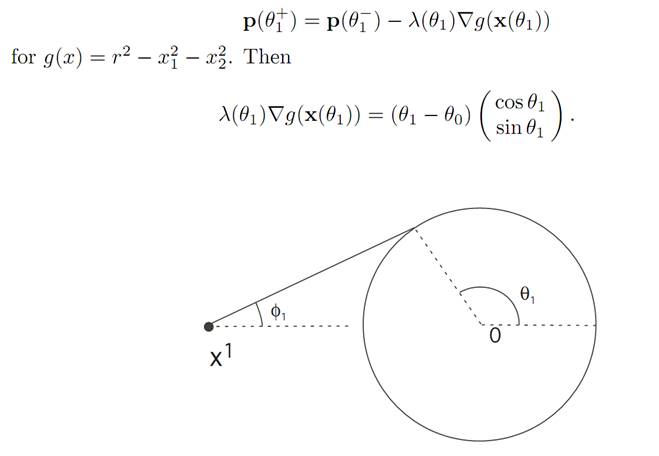

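Solving for the unknowns. We now have the five equations (1.1)–(1.4) for the five unknown functions $p_1, p_2, \alpha_1, \alpha_2, \lambda$ that depend on $t$. We introduce the angle $\theta$, as illustrated, and note that $\frac{d}{d\theta} = r\frac{d}{dt}$. A calculation then confirms that the solutions are

$$\alpha_1(\theta) = -\sin\theta, \qquad \alpha_2(\theta) = \cos\theta,$$

$$\lambda = -\frac{k + \theta}{2r},$$

and

$$p_1(\theta) = k\cos\theta - \sin\theta + \theta\cos\theta, \qquad p_2(\theta) = k\sin\theta + \cos\theta + \theta\sin\theta,$$

for some constant $k$.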
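The claim that these functions solve the system can be checked numerically. The sketch below assumes the closed-form solution α = (−sin θ, cos θ), λ = −(k + θ)/(2r), p1 = (k + θ) cos θ − sin θ, p2 = (k + θ) sin θ + cos θ, and evaluates the residuals of (1.1)–(1.4), using d/dt = (1/r) d/dθ and a central difference for the derivative. The numerical values of `R` and `K` and all function names are illustrative assumptions.

```python
import math

R = 2.0     # disk radius (the text's r); hypothetical value
K = -0.3    # the free constant k; hypothetical value

def alpha(theta):
    return (-math.sin(theta), math.cos(theta))

def p(theta):
    return ((K + theta) * math.cos(theta) - math.sin(theta),
            (K + theta) * math.sin(theta) + math.cos(theta))

def lam(theta):
    return -(K + theta) / (2.0 * R)

def residuals(theta, h=1e-6):
    """Residuals of equations (1.1)-(1.4) at the angle theta."""
    a1, a2 = alpha(theta)
    p1, p2 = p(theta)
    p_plus, p_minus = p(theta + h), p(theta - h)
    # dp/dt = (1/R) dp/dtheta, approximated by a central difference
    dp1 = (p_plus[0] - p_minus[0]) / (2.0 * h) / R
    dp2 = (p_plus[1] - p_minus[1]) / (2.0 * h) / R
    return (
        dp1 + 2.0 * lam(theta) * a1,                    # (1.1), first equation
        dp2 + 2.0 * lam(theta) * a2,                    # (1.1), second equation
        lam(theta) - (p2 * a1 - p1 * a2) / (2.0 * R),   # (1.2)
        a1 * a1 + a2 * a2 - 1.0,                        # (1.3)
        p1 * a1 + p2 * a2 - 1.0,                        # (1.4)
    )

worst = max(abs(res) for th in (0.1, 0.7, 1.5, 2.4) for res in residuals(th))
```

The value `worst` should be at the level of finite-difference error, which supports the formulas without replacing the analytic verification.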

Case 3: approaching and leaving the obstacle. In general, we must piece together the results from Case 1 and Case 2. So suppose now $x(t) \in R = \mathbb{R}^2 - B$ for $0 \le t < s_0$ and $x(t) \in \partial B$ for $s_0 \le t \le s_1$.

We have shown that for times $0 \le t < s_0$, the trajectory $x(\cdot)$ is a straight line.

For this case we have shown already that $p = \alpha$ and therefore

$$p_1 \equiv -\cos\phi_0, \qquad p_2 \equiv \sin\phi_0,$$

for the angle $\phi_0$ as shown in the picture.

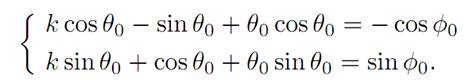

By the jump conditions, $p(\cdot)$ is continuous when $x(\cdot)$ hits $\partial B$ at the time $s_0$, meaning in this case that

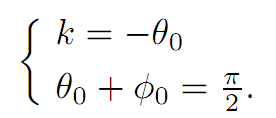

These identities hold if and only if

$$k = -\theta_0, \qquad \theta_0 + \phi_0 = \frac{\pi}{2}.$$

The second equality says that the optimal trajectory is tangent to the disk $B$ when it hits $\partial B$.

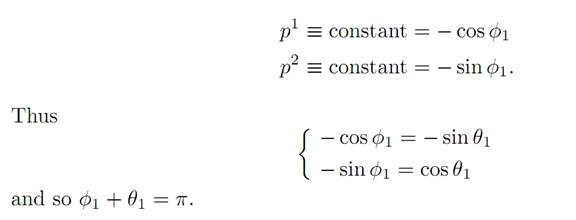

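We turn next to the trajectory as it leaves $\partial B$: see the next picture. Writing $\theta_1$ for the angle at which $x(\cdot)$ leaves $\partial B$, and using $k = -\theta_0$, we then have

$$p_1(\theta_1^-) = -\theta_0\cos\theta_1 - \sin\theta_1 + \theta_1\cos\theta_1, \qquad p_2(\theta_1^-) = -\theta_0\sin\theta_1 + \cos\theta_1 + \theta_1\sin\theta_1.$$

Now our formulas above for $\lambda$ and $k$ imply

$$\lambda(\theta_1) = \frac{\theta_0 - \theta_1}{2r}.$$

The jump conditions give

$$p(\theta_1^+) = p(\theta_1^-) - \lambda(\theta_1)\nabla g(x(\theta_1))$$

for $g(x) = r^2 - x_1^2 - x_2^2$. Then

$$\lambda(\theta_1)\nabla g(x(\theta_1)) = (\theta_1 - \theta_0)\,(\cos\theta_1, \sin\theta_1)^T.$$

Therefore

$$p_1(\theta_1^+) = -\sin\theta_1, \qquad p_2(\theta_1^+) = \cos\theta_1,$$

and so the trajectory is tangent to $\partial B$. If we apply the usual Maximum Principle after $x(\cdot)$ leaves $\partial B$, we find that $p$ is again constant and that $\alpha = p$, as in Case 1. Hence the trajectory leaves $\partial B$ along the tangent line at $x(\theta_1)$ and continues as a straight line.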

CRITIQUE. We have carried out elaborate calculations to derive some pretty obvious conclusions in this example. It is best to think of this as a confirmation in a simple case of Theorem (MAXIMUM PRINCIPLE FOR STATE CONSTRAINTS), which applies in far more complicated situations.
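The geometric conclusion of Example 1 (straight segments that meet ∂B tangentially, joined by an arc of ∂B) gives a closed-form length. The sketch below assumes the disk is centered at the origin and that both endpoints lie outside the open disk. The function name `shortest_path_length` and the sample points are hypothetical.

```python
import math

def shortest_path_length(a, b, r):
    """Length of the shortest path from a to b avoiding the open disk B(0, r)."""
    ax, ay = a
    bx, by = b
    na, nb = math.hypot(ax, ay), math.hypot(bx, by)
    if na < r or nb < r:
        raise ValueError("endpoints must lie outside the open disk")
    dx, dy = bx - ax, by - ay
    seg2 = dx * dx + dy * dy
    if seg2 == 0.0:
        return 0.0
    # Closest point of the segment [a, b] to the origin.
    t = max(0.0, min(1.0, -(ax * dx + ay * dy) / seg2))
    cx, cy = ax + t * dx, ay + t * dy
    if math.hypot(cx, cy) >= r:
        return math.sqrt(seg2)          # Case 1: the straight line is admissible
    # Otherwise: tangent segment + arc of the circle + tangent segment.
    cos_ab = (ax * bx + ay * by) / (na * nb)
    angle_ab = math.acos(max(-1.0, min(1.0, cos_ab)))
    arc_angle = angle_ab - math.acos(r / na) - math.acos(r / nb)
    return math.sqrt(na * na - r * r) + math.sqrt(nb * nb - r * r) + r * arc_angle

blocked = shortest_path_length((-2.0, 0.0), (2.0, 0.0), 1.0)
free = shortest_path_length((-2.0, 2.0), (2.0, 2.0), 1.0)
```

For the first pair of points the segment passes through the disk, so the length is two tangent segments of length √3 plus an arc of angle π/3. For the second pair the straight segment of length 4 is already admissible.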

1.2 AN INVENTORY CONTROL MODEL.

Now we turn to a simple model for ordering and storing items in a warehouse. Let the time period T > 0 be given, and introduce the variables

x(t) = amount of inventory at time t

α(t) = rate of ordering from manufacturers, α ≥ 0,

d(t) = customer demand (known)

γ = cost of ordering 1 unit

β = cost of storing 1 unit.

Our goal is to fill all customer orders shipped from our warehouse, while keeping our storage and ordering costs at a minimum. Hence the payoff to be maximized is

$$P[\alpha(\cdot)] = -\int_0^T \left(\gamma\alpha(t) + \beta x(t)\right) dt. \tag{P}$$

We have $A = [0, \infty)$ and the constraint that $x(t) \ge 0$. The dynamics are

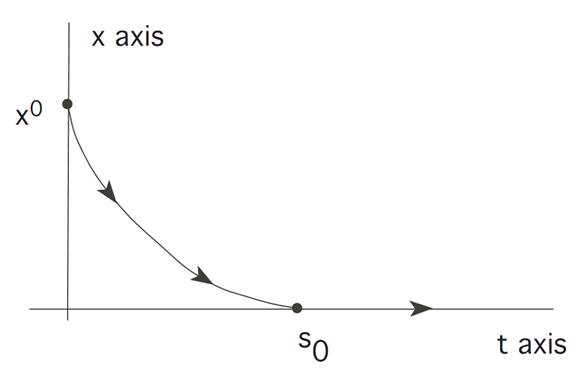

Guessing the optimal strategy. Let us just guess the optimal control strategy: we should at first not order anything (α = 0) and let the inventory in our warehouse fall off to zero as we fill demands; thereafter we should order just enough to meet our demands (α = d).

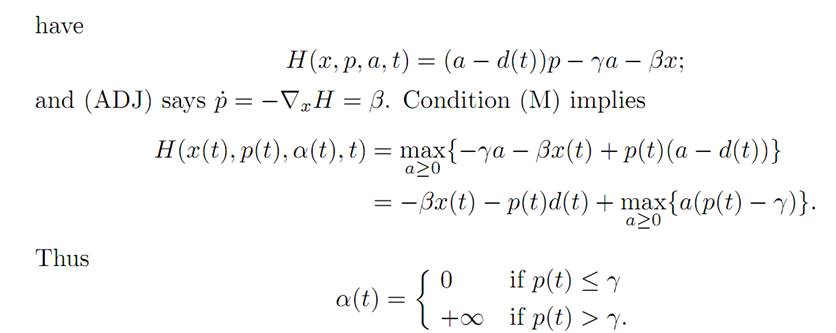

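Using the maximum principle. We will prove this guess is right, using the Maximum Principle. Assume first that $x(t) > 0$ on some interval $[0, s_0]$. We then have

$$H(x, p, a, t) = (a - d(t))\,p - \gamma a - \beta x,$$

and (ADJ) says $\dot p = -\nabla_x H = \beta$. Condition (M) implies

$$H(x(t), p(t), \alpha(t), t) = \max_{a \ge 0}\{-\gamma a - \beta x(t) + p(t)(a - d(t))\} = -\beta x(t) - p(t)d(t) + \max_{a \ge 0}\{a\,(p(t) - \gamma)\}.$$

Thus

$$\alpha(t) = \begin{cases} 0 & \text{if } p(t) \le \gamma, \\ +\infty & \text{if } p(t) > \gamma. \end{cases}$$

If $\alpha(t) \equiv +\infty$ on some interval, then $P[\alpha(\cdot)] = -\infty$, which is impossible, because there exists a control with finite payoff. So it follows that $\alpha(\cdot) \equiv 0$ on $[0, s_0]$: we place no orders.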

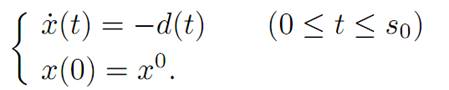

According to (ODE), we have

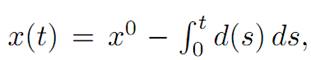

Thus $s_0$ is the first time the inventory hits 0. Now since

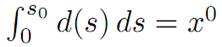

we have $x(s_0) = 0$. That is,

$$\int_0^{s_0} d(s)\,ds = x^0,$$

where $x^0 = x(0)$ is the initial inventory, and we have hit the constraint.

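Now we use the Pontryagin Maximum Principle with state constraint for times $t \ge s_0$. Here

$$R = \{x \ge 0\} = \{g(x) := -x \le 0\}$$

and

$$c(x, a, t) = \nabla g(x) \cdot f(x, a, t) = (-1)(a - d(t)) = d(t) - a.$$

We have

$$H(x(t), p(t), \alpha(t), t) = \max_{a \ge 0}\{H(x(t), p(t), a, t) \mid c(x(t), a, t) = 0\}. \tag{M′}$$

But $c(x(t), \alpha(t), t) = 0$ if and only if $\alpha(t) = d(t)$. Then (ODE) reads

$$\dot x(t) = \alpha(t) - d(t) = 0$$

and so $x(t) = 0$ for all times $t \ge s_0$.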
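The strategy derived above (order nothing until the stock runs out, then order exactly the demand) can be simulated with a simple forward Euler scheme. The sketch below assumes the payoff is minus the integral of γα + βx over [0, T] and the dynamics are ẋ = α − d. The constant demand, the parameter values, and the names `simulate`, `guessed_rule`, and `keep_stock_rule` are illustrative assumptions. The discrete rule orders only the shortfall within a time step, which is a time-discretized stand-in for the continuous strategy.

```python
def simulate(x0, demand, order_rule, T, gamma, beta, dt=1e-3):
    """Euler simulation; returns (payoff, first ordering time, final stock)."""
    x, cost, first_order = x0, 0.0, None
    for i in range(int(round(T / dt))):
        t = i * dt
        d = demand(t)
        a = order_rule(x, d, dt)
        if a > 0.0 and first_order is None:
            first_order = t
        cost += (gamma * a + beta * x) * dt   # ordering cost + storage cost
        x = max(x + (a - d) * dt, 0.0)        # clamp guards against round-off
    return -cost, first_order, x

def guessed_rule(x, d, dt):
    # Order nothing while stock covers demand over the step, else the shortfall.
    return max(0.0, d - x / dt)

def keep_stock_rule(x, d, dt):
    # Comparison strategy: always order the demand, so the stock never changes.
    return d

demand = lambda t: 2.0                  # hypothetical constant demand
x0, T, gamma, beta = 3.0, 5.0, 1.0, 0.5

payoff_guess, s0, x_end = simulate(x0, demand, guessed_rule, T, gamma, beta)
payoff_keep, _, _ = simulate(x0, demand, keep_stock_rule, T, gamma, beta)
```

With these values the stock runs out at about s0 = x0/d = 1.5, and the guessed strategy costs roughly 7 for ordering plus 1.125 for storage, against 17.5 for the comparison strategy. This is only a sanity check against one alternative control, not a proof of optimality.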

